Home Lab Setup

In this post, I offer an overview of my home lab setup, detailing the hardware and systems I use for testing and development. From Ubiquiti routers to Raspberry Pi clusters, I explain how each component is configured and managed using Ansible for automation. I also walk through my process for bringing new systems online and maintaining a hands-off, streamlined environment.

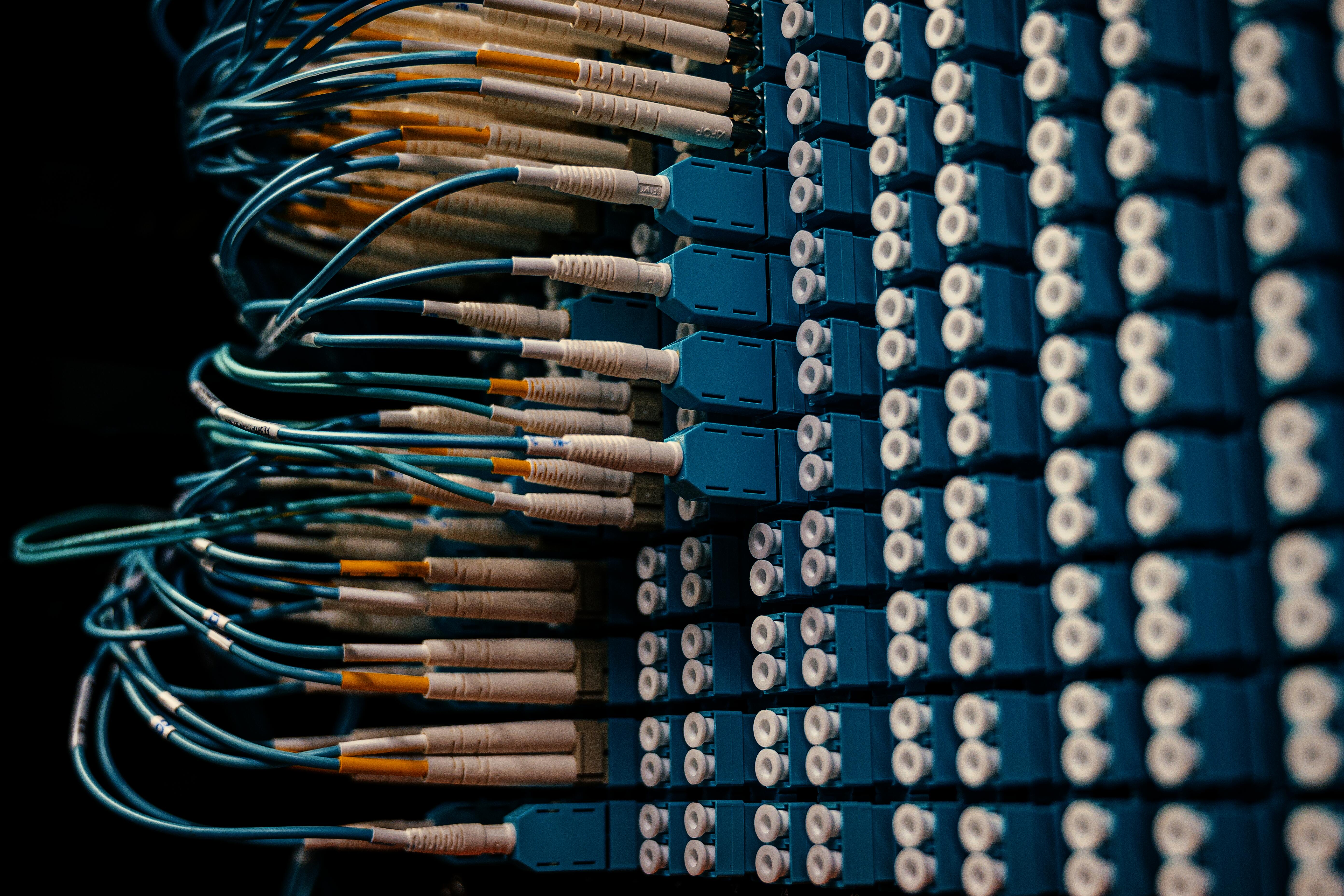

As I’ve hinted in previous posts, my home lab serves as a playground for testing and development, allowing me to experiment with a variety of hardware and configurations. Here’s a peek under the hood at the hardware running the show:

- Ubiquiti Router

- Cisco Switches (because you can never go wrong with reliability)

- Lenovo Tiny Desktops

  - Dedicated to Kubernetes master nodes, freeing me from relying on my Pi clusters.

  - One system runs the automation systems via Home Assistant.

- Raspberry Pi CM4 Cluster (6 nodes, 8 GB RAM each, NVMe storage)

  - DeskPi Super6C Raspberry Pi CM4 Cluster Board

- Raspberry Pi 4 - Solely handling DNS duties.

- Synology NAS - It’s currently hanging around but soon to be retired.

All systems have statically assigned IPv4 addresses, and DHCP/DNS records are maintained using Ansible (stay tuned: I’ll be releasing the role soon!). Basic configuration, such as NTP settings, package installation, updates, and user setup, is also automated with Ansible.
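To make the baseline idea concrete, here is a minimal sketch of what a play covering NTP, packages, updates, and a user account could look like. It assumes Debian-based hosts and chrony as the time service. The file name, package list, and account name are placeholders for illustration, not the actual role.

```yaml
# baseline.yml (hypothetical file name): a sketch, not the real role
- name: Apply baseline configuration
  hosts: all
  become: true
  vars:
    baseline_packages:        # placeholder package list
      - chrony                # assumed NTP client
      - curl
      - vim
    admin_user: labadmin      # placeholder account name
  tasks:
    - name: Install baseline packages
      ansible.builtin.apt:
        name: "{{ baseline_packages }}"
        state: present
        update_cache: true
        cache_valid_time: 3600

    - name: Apply pending package updates
      ansible.builtin.apt:
        upgrade: dist

    - name: Ensure the time service is enabled and running
      ansible.builtin.service:
        name: chrony
        state: started
        enabled: true

    - name: Ensure the admin user exists
      ansible.builtin.user:
        name: "{{ admin_user }}"
        groups: sudo
        append: true
        shell: /bin/bash
```

Because every task is idempotent, a play like this can be re-run against the whole inventory at any time without disturbing hosts that already match the baseline.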

Here’s how I typically bring a new system online:

- Flash the system image (using Raspberry Pi Imager or a custom Debian image with a predefined hostname and user account).

- Boot up and assign a static IP via the router.

- Add the system to the /etc/hosts file on my workstation.

- Update the Ansible inventory.yml to reflect the new system’s role—DNS names only, no IP addresses.

- Use ssh-copy-id to transfer SSH keys to the new system (FQDN all the way).

- Verify SSH is set up and functioning properly.

- Run the necessary Ansible playbooks:

  - Master Playbook

  - DNS Playbook
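For the inventory step in the list above, a YAML inventory that follows the "DNS names only" rule might look roughly like this. It is a sketch: the group names and the `lab.example` hostnames are made up for illustration, and the real inventory.yml will differ.

```yaml
# inventory.yml: illustrative only; all group and host names are hypothetical
all:
  children:
    dns:
      hosts:
        dns01.lab.example:
    k8s_masters:
      hosts:
        tiny01.lab.example:
        tiny02.lab.example:
    k8s_workers:
      hosts:
        cm4-01.lab.example:
        cm4-02.lab.example:
```

Grouping hosts by role lets a playbook target something like `k8s_workers` directly, and keeping IP addresses out of the file means an address change only has to be made in DNS (or /etc/hosts), not in the inventory.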

By this point, the new system should be ready, configured to the baseline, and potentially even further if its role calls for it (e.g., becoming a Kubernetes worker node).

Most of my systems are headless, and I rarely need to interact with them directly. They’re here for testing and workload purposes, so unless I need to tweak a configuration or investigate something, they’re largely hands-off. These servers run themselves, allowing me to focus on the more interesting stuff. Cheers!